Author

Monica Davis

Monica Davis writes about technologies and industry challenges that shape security and edge topics.

How do we give AI a moral consciousness – and is this even possible? What kind of moral and social standards should be established when creating autonomous machines? And who will set these standards? The carmakers, the developers, the governments or the customers themselves?

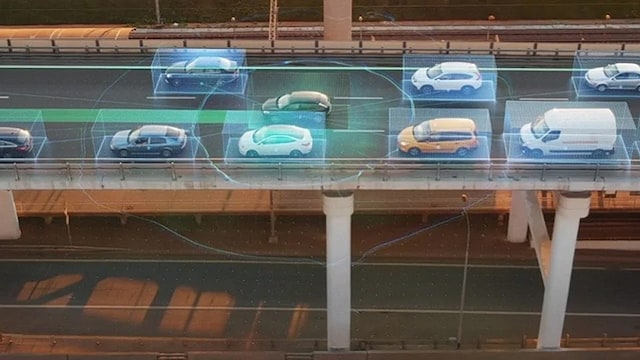

A self-driving car carries a young couple and their newborn home. An elderly woman disregards the pedestrian light and steps suddenly into the road. What will happen next? Will the car swerve to avoid the woman, crashing head-on into a tree? Or will the car continue on its programmed path, running over the woman and saving the lives of its passengers? Both scenarios end with a question that has no answer: Who should the car kill?

This “no-win” scenario, known as the “Trolley Problem” and dating back to 1950s philosophy, is just one of many that reveal the moral, ethical and social dilemmas confronting us as we develop new technologies such as autonomous driving. It raises questions that demand urgent answers, as autonomous cars are already on the move.

Smarter, greener, safer autonomous cars?

These issues were discussed at NXP FTF Connects Silicon Valley. Lars Reger, Chief Technology Officer of NXP’s Automotive business unit, explained that these questions must be addressed collaboratively to realize the obvious advantages of autonomous cars.

Autonomous cars could help prevent the nearly 1.3 million deaths in road crashes each year and could have a profoundly positive effect on the environment. According to research from the Department of Energy (DOE), electrified automated cars could reduce energy consumption by as much as 90 percent.

In the worst-case scenario, none of the advantages of these new vehicles will be realized if one component is missing: trust. “There is no market without the trust of our customers,” said Lars Reger. Trust is key. And it must be established by all industry parties involved.

Moral values or personal interest?

The importance of the trust issue is also illustrated by a newly published study by MIT Media Lab. According to the scientists, most of the research participants rejected the idea of buying a vehicle with unpredictable behavior. Essentially, customers want a car that protects its own passengers at all costs. This becomes a social dilemma, as self-interest risks overpowering moral values, and a technical dilemma for the industry too: how do engineers design algorithms that reconcile moral values and personal interest?

These challenges are currently being tackled by the IEEE AI Ethics Initiative, which developed Ethically Aligned Design, a go-to resource that helps technologists pragmatically address issues relating to AI and autonomous systems in the algorithmic era.

IEEE AI Ethics Initiative Executive Director John C. Havens pointed out that while it may be impossible to plan for every imaginable ethical scenario with self-driving cars, now is the time to establish key principles like transparency and accountability for these systems, so that the industry can innovate while prioritizing human wellbeing.

Ultimately, an autonomous car could be a trusted companion that is directed by its owners’ personal data and values, said Havens. This is a perception also shared by Lars Reger: “In the best case, an ethical autonomous car helps you to avoid danger and enhances your capabilities.”

But who is to establish these rules and moral values? And how will our personal data be used to shape the future of such technologies? The next 10 to 20 years will be a critical time in finding these answers. But one thing is already clear: just like the engine, ethics will become a part of every car.


Tags: ADAS and Safe Driving, Automotive

Marketing Communications Manager at NXP

