With rapid advancements in the machine learning (ML) space, the array of new applications suitable for ML is almost dizzying. At NXP, we continue to grow our ML software solutions to support this ever-expanding market. Let’s look at some of the latest technology advancements we have made available within our eIQ machine learning software environment.

All machine learning applications, whether for cloud, mobile, automotive or embedded, have the same steps in common: developers must collect and label training data, train and optimize a neural network model, and deploy that model on a processing platform. NXP’s processing platforms don’t specifically target cloud or mobile; our focus is on enabling machine learning for embedded applications (industrial and IoT) and automotive applications (driver replacement, sensor fusion, driver monitoring and ADAS).

eIQ ML Software for IoT and Industrial

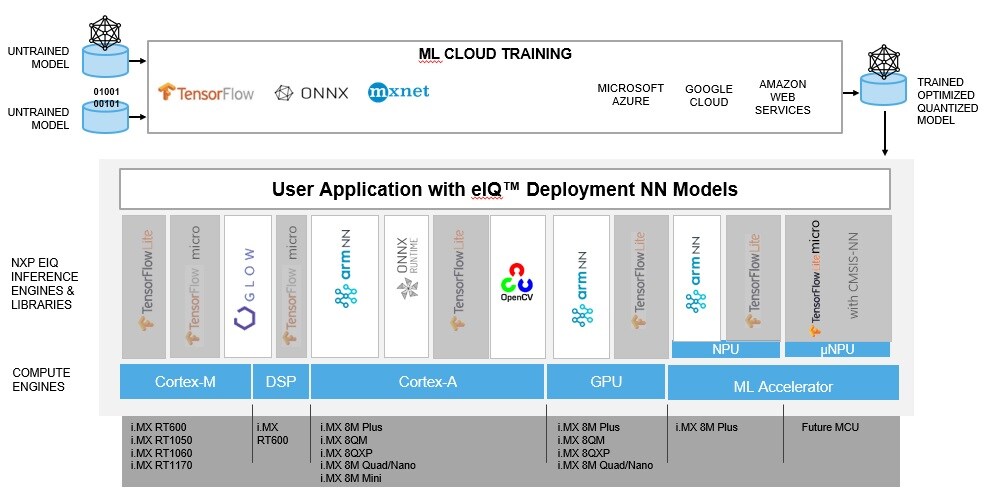

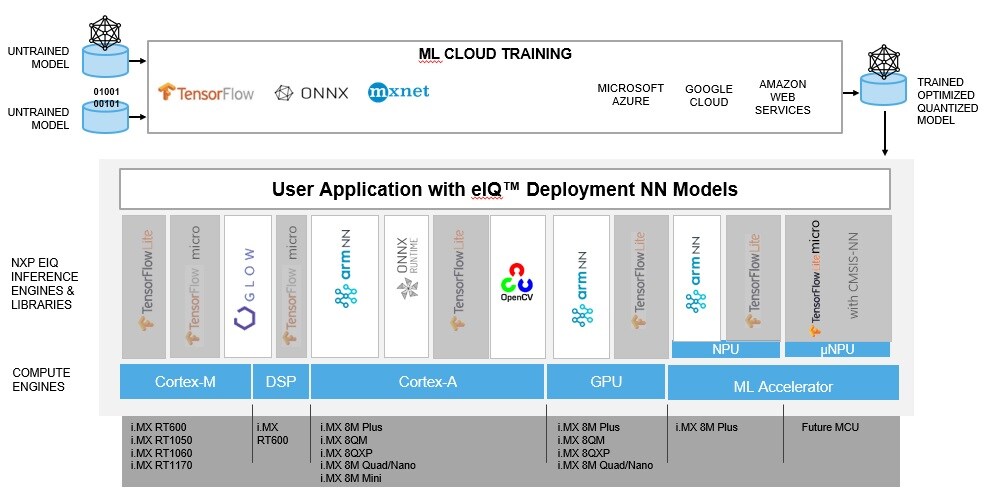

In June 2019, we launched our eIQ ML Software Development Environment with the primary goal of optimally deploying open source inference engines on our MCUs and application processors. Today these engines include TensorFlow Lite, Arm® NN, ONNX Runtime and OpenCV and, as Figure 1 depicts, they span all compute engines in one way or another. Wherever possible, we integrate optimizations into the inference engines (such as a performance-tuned backend for TensorFlow Lite) so that they run faster on our MCUs and application processors. To facilitate customer deployment, we include these engines along with all necessary libraries and kernels (for example, CMSIS-NN and Arm Compute Library) in our Yocto BSPs and MCUXpresso software development kit (SDK).

Figure 1: eIQ machine learning software development environment

An important part of our support for these open source inference engines is keeping up with version upgrades, whether they arrive on a regular schedule (for example, Arm does quarterly releases of Arm NN and Arm Compute Library) or irregularly (Google releases TensorFlow Lite versions whenever warranted). In the fast-moving world of machine learning, these upgrades and feature enhancements are important: they typically deliver better performance, support for more neural network operators (to allow the use of newer models) and other new features. The release information, which is much too long to list here, is available on the GitHub pages for Arm NN and TensorFlow.
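
To make the point about operator support concrete, the sketch below checks which operators in a model are missing from a given engine release. It is only an illustration: the release names, the operator sets and the `unsupported_ops` helper are hypothetical and do not describe any actual TensorFlow Lite or Arm NN version.

```python
from typing import Dict, Set

# Hypothetical operator coverage for two releases of an inference engine.
# These sets are invented for illustration and do not reflect a real release.
SUPPORTED_OPS: Dict[str, Set[str]] = {
    "older_release": {"CONV_2D", "DEPTHWISE_CONV_2D", "RELU", "SOFTMAX"},
    "newer_release": {"CONV_2D", "DEPTHWISE_CONV_2D", "RELU", "SOFTMAX", "HARD_SWISH"},
}


def unsupported_ops(model_ops: Set[str], release: str) -> Set[str]:
    """Return the operators the model needs that the release does not provide."""
    return model_ops - SUPPORTED_OPS[release]


# Operators used by a hypothetical newer model.
model_ops = {"CONV_2D", "HARD_SWISH", "SOFTMAX"}

for release in sorted(SUPPORTED_OPS):
    missing = unsupported_ops(model_ops, release)
    status = "can run the model" if not missing else f"is missing {sorted(missing)}"
    print(f"{release} {status}")
```

In this toy setup, the model only becomes deployable once the engine is upgraded, which is why keeping the bundled engines current matters.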

eIQ Auto

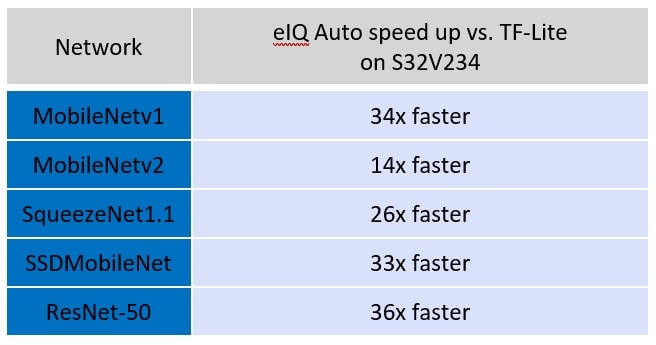

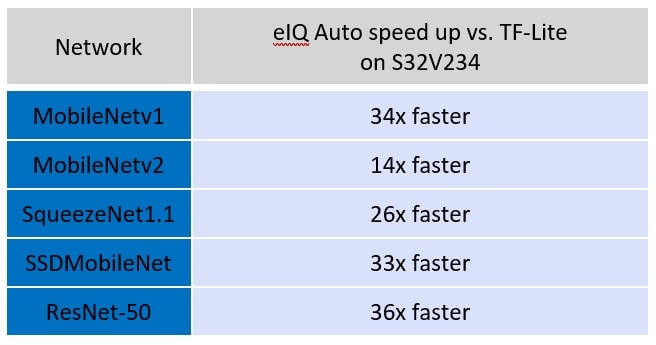

Figure 2: eIQ Auto performance benchmarks

Recently, as machine learning technologies have expanded within NXP, eIQ ML software has grown to become an umbrella brand representing multiple facets of machine learning. A further enhancement of eIQ software comes from our automotive group, which recently rolled out the eIQ Auto toolkit, an Automotive SPICE® compliant deep learning toolkit for NXP’s S32V processor family and ADAS development. This technology aligns with our S32 processors offering functional safety, supporting ISO 26262 up to ASIL D, IEC 61508 and DO-178. The inference engine of the eIQ Auto toolkit includes a backend that automatically selects the optimum partitioning of a given neural network model’s workload across the various compute engines in the device. The eIQ Auto toolkit also integrates functionality to quantize, prune and compress any given neural network. Benchmarks indicate that this combined process leads to up to 36x greater performance for given models compared to other embedded deep learning frameworks.
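
The internals of the eIQ Auto toolkit are not described here, so the following is only a generic sketch of what pruning and quantizing a network's weights can look like: magnitude pruning followed by symmetric 8-bit quantization. The function names, the 50% sparsity target and the sample weights are assumptions chosen for illustration and are not part of eIQ Auto.

```python
from typing import List, Tuple


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude weights (a `sparsity` fraction of them)."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the signed range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map 8-bit integers back to approximate floating-point weights."""
    return [v * scale for v in quantized]


# Illustrative weights only; a real layer would hold thousands of values.
weights = [0.02, -0.75, 0.40, -0.01, 1.27, 0.05, -0.33, 0.00]

pruned = prune_by_magnitude(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)

print("pruned:   ", pruned)
print("quantized:", quantized)
print("max error:", max(abs(a - b) for a, b in zip(pruned, restored)))
```

Pruning makes the weight tensor sparse, which helps compression, and quantization stores each remaining weight in one byte instead of four, at the cost of a rounding error bounded by half the scale factor.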

Over time, we will roll out updated versions and new releases of eIQ ML software with added features and functionality to bring increased value to your machine learning applications. Without unveiling too much detail, new eIQ ML software features will include tools for model optimization (performance increase and size reduction) and enhancements to make ML software easier to use. For NXP, this is the future of machine learning: faster, smaller, easier-to-use software with increased functionality, all leading to widespread industry adoption.