Machine learning has wide and varied applications, but few are as compelling
as those in public safety. Recently, at NXP Connects in Santa Clara, I worked
with the team from Arcturus, who have been developing methods to provide
operational insights and improve public safety for smart public transportation
systems.

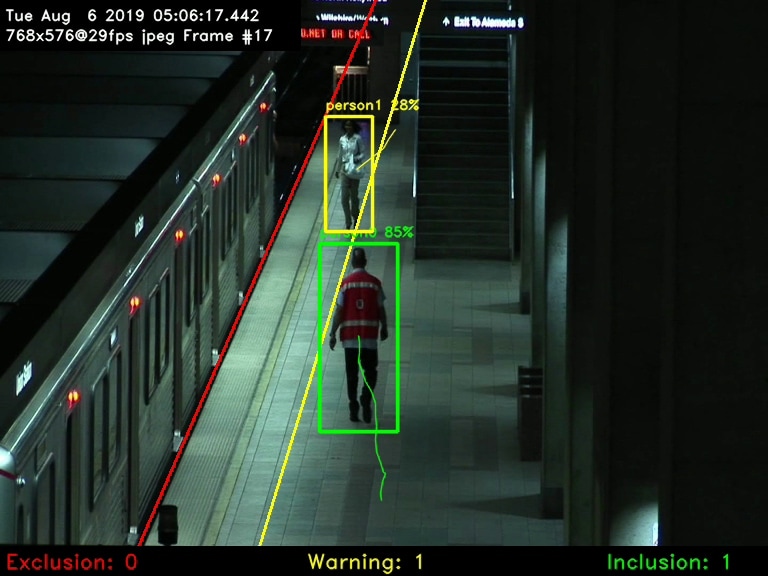

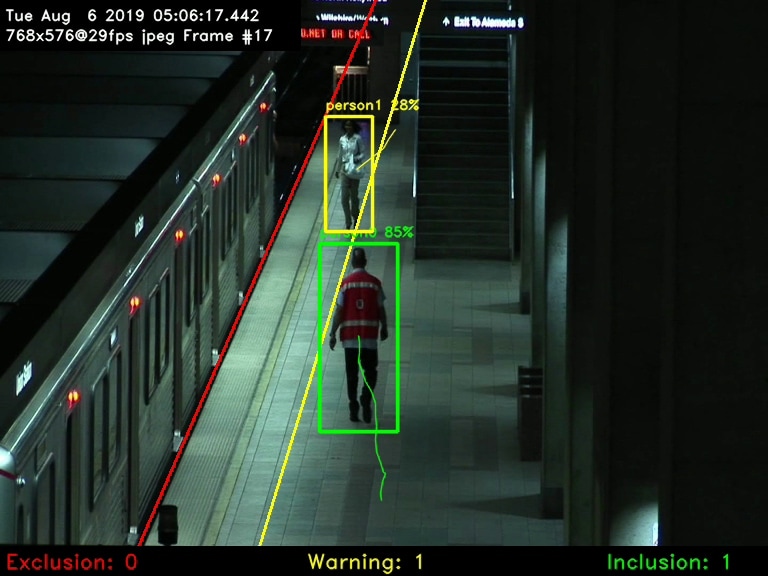

The application that Arcturus focuses on processes video from a security
camera on a subway platform and looks for three conditions:

- how crowded the platform is

- how close to the platform’s edge people are standing

- whether there are any abandoned packages or luggage

If any of these conditions is met, the system immediately sends a
notification or performs a local, real-time action, such as shutting down
track power. This real-time response illustrates the power of machine learning
to turn a task typically left to human observation into active detection.
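To make the three checks concrete, here is a minimal sketch of how rules like these might be evaluated on the output of an object detector. It is not Arcturus's implementation: the `Detection` fields, the class labels, and every threshold below are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """One detected object in a frame (hypothetical structure)."""
    label: str             # "person" or "bag" (assumed class names)
    x: float               # horizontal centre of the bounding box, in pixels
    foot_y: float          # bottom of the bounding box, in pixels
    still_frames: int = 0  # consecutive frames the object has not moved


# All thresholds are assumptions for illustration only.
MAX_PEOPLE = 25         # more people than this counts as crowded
EDGE_Y = 600.0          # image row of the platform edge (larger y = nearer the track)
EDGE_MARGIN = 40.0      # safety band in front of the edge, in pixels
ABANDONED_FRAMES = 300  # a bag must be still for at least this many frames
OWNER_RADIUS = 150.0    # a person within this horizontal distance "attends" a bag


def check_platform(detections: List[Detection]) -> List[str]:
    """Return the names of the conditions that are met in this frame."""
    people = [d for d in detections if d.label == "person"]
    bags = [d for d in detections if d.label == "bag"]
    alerts = []

    # Condition 1: how crowded the platform is.
    if len(people) > MAX_PEOPLE:
        alerts.append("crowded")

    # Condition 2: someone is standing inside the safety band at the edge.
    if any(p.foot_y > EDGE_Y - EDGE_MARGIN for p in people):
        alerts.append("near_edge")

    # Condition 3: a bag that has not moved and has nobody nearby.
    for bag in bags:
        unattended = all(abs(p.x - bag.x) > OWNER_RADIUS for p in people)
        if bag.still_frames >= ABANDONED_FRAMES and unattended:
            alerts.append("abandoned_item")
            break

    return alerts
```

A caller would run `check_platform` on each frame's detections and map any returned alert to a notification or a local action.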

Built with i.MX 8M Mini

To build the system, Arcturus chose NXP’s quad-core i.MX 8M Mini applications
processor combined with Arm NN to run their neural network detection algorithm
at the edge. For a public safety system like this one, edge processing
improves response time and reliability by eliminating the need to ship pixel
data from each camera across the network and up to the cloud.
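A rough back-of-the-envelope calculation shows why keeping pixel data on the device matters. The resolution, frame rate, pixel format, and alert size below are assumptions for illustration, not figures from the Arcturus system, and a real deployment would normally compress video before sending it anywhere.

```python
def raw_video_bits_per_second(width: int, height: int,
                              bytes_per_pixel: int, fps: int) -> int:
    """Bandwidth needed to ship uncompressed frames from one camera."""
    return width * height * bytes_per_pixel * fps * 8


# Assumed camera: 1920x1080, 3 bytes per pixel (RGB), 30 frames per second.
raw_bps = raw_video_bits_per_second(1920, 1080, 3, 30)

# Assumed alert: a 200-byte message sent only when a condition is met.
alert_bits = 200 * 8

print(f"raw video: {raw_bps / 1e9:.2f} Gbit/s per camera")
print(f"one alert: {alert_bits} bits")
```

Under these assumptions, an edge device that sends only alerts moves many orders of magnitude less data than one that streams raw frames, and detection no longer depends on the network being available.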

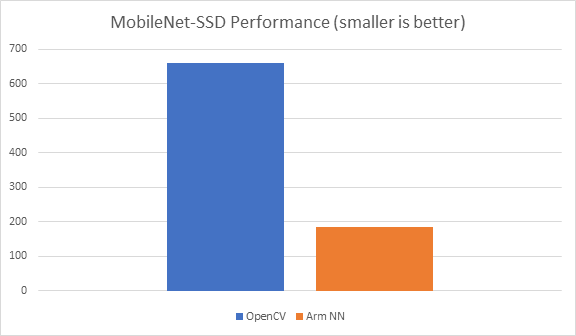

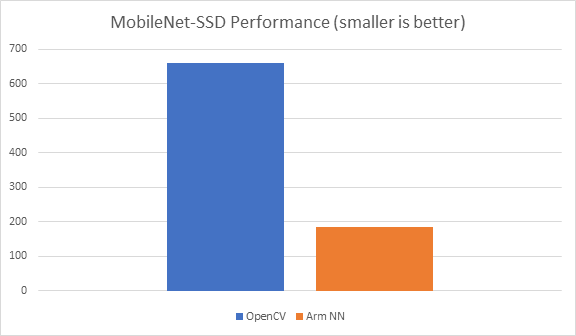

The table below describes the efficiency Arcturus was able to achieve using
Arm NN compared with their existing pipeline deployed with OpenCV. The
performance improvement allowed them to run their detection natively on the
four Arm® Cortex®-A53 cores in the i.MX 8M Mini, without the need for
specialized ML hardware or even a GPU.

Supported by eIQ Software Development Tool

To help Arcturus port their application from OpenCV to the i.MX 8M Mini, we
let them loose on a pre-production version of our eIQ machine learning
software development tool. It’s important to note that eIQ also supports
OpenCV for machine learning, but we typically recommend Arm NN for performance
and efficiency reasons. Using eIQ software helped them deploy their
application in days rather than weeks, without any direct support from us.
From our perspective, this project was a good exercise to confirm that the eIQ
machine learning development environment delivers on the promise of a smooth
transition from desktop to embedded application and lets users take full
advantage of the processing available in the i.MX 8M Mini device.

We’re excited to see what Arcturus has coming next. They have work underway to
apply ML techniques that use clothing characteristics and re-identification
methods to actively locate people. Losing your child at the mall may just
become a thing of the past! If you need more information, contact them at
ArcturusNetworks.

For more information on eIQ and i.MX 8M Mini