We’re entering a new era of computing where intelligence lives at the edge, from factory robots to smart vehicles assisting drivers, entertaining passengers and even helping with homework. Today’s AI must be local, responsive, efficient and secure to meet the demands of real-time, on-device decision-making.

That’s why NXP is taking a major step forward. On October 27, 2025, we are excited to announce that we have officially completed the acquisition of Kinara, one of the industry’s pioneers in high-performance, power-efficient Discrete Neural Processing Units (DNPUs).

Why It Matters

The future of intelligent systems is edge-centric. From predictive maintenance in factories to generative AI in smart cameras, edge systems are now expected to handle complex inferencing workloads locally without relying on the cloud. This shift brings compelling benefits: lower latency, improved data privacy, reduced bandwidth costs and enhanced resiliency. But it also raises the bar for on-device compute performance and energy efficiency.

Meanwhile, the edge AI processing market is growing meteorically as developers seek secure, cost-effective and power-efficient AI solutions. Omdia projected at the end of 2024 that the market for artificial intelligence acceleration hardware at the edge, defined to include all compute above the microcontroller class and within 20 ms network round-trip time from the user, would grow from $43 bn at year-end to $89.7 bn by 2029¹.
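To put the projection in annual terms, the quoted figures can be turned into an implied compound annual growth rate. This is a minimal sketch, not a figure from the Omdia report: it assumes the starting point is year-end 2024 and the end point is 2029, a five-year span, and the helper name `cagr` is purely illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text, in billions of US dollars.
START_BN = 43.0
END_BN = 89.7
YEARS = 5  # assumption: year-end 2024 through 2029

implied_rate = cagr(START_BN, END_BN, YEARS)
print(f"Implied CAGR: {implied_rate:.1%}")
```

Under that five-year assumption, the projection works out to roughly 16% growth per year.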

With the acquisition of Kinara, NXP boosts its portfolio with high-performance discrete NPUs and establishes a scalable platform for AI-powered edge systems. NXP will also benefit from the addition of Kinara’s AI engineers, who have extensive experience in ML hardware, software stacks and application integration. Just as importantly, the acquisition adds depth to NXP’s software offering to support customers building production-ready solutions.

Discrete neural processing units reshape edge intelligence. Discover how NXP and Kinara are advancing local AI in the official press release.

The Edge Demands Highly Efficient and Scalable AI

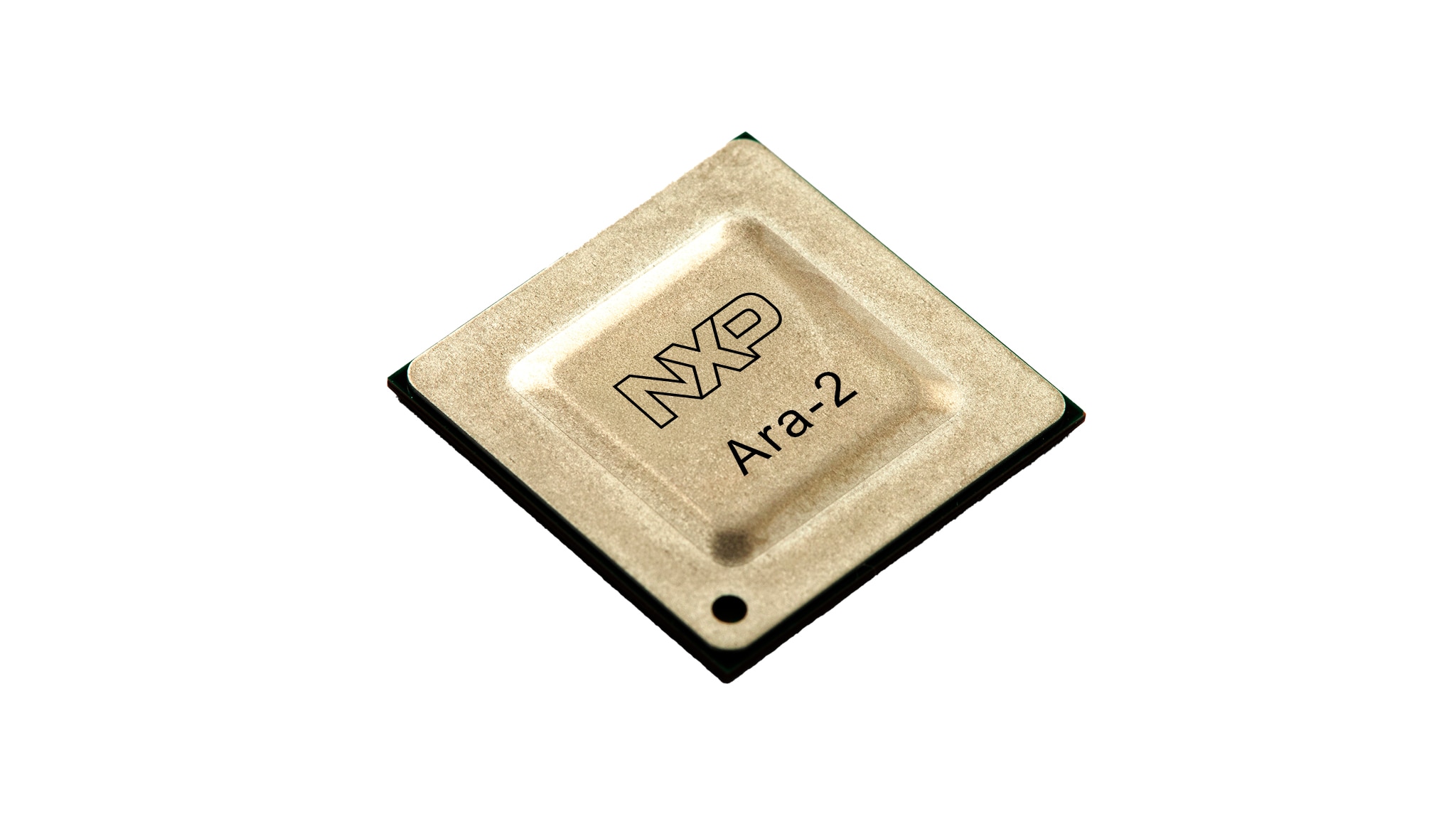

Kinara’s flagship products, Ara-1 and Ara-2, are discrete NPUs designed to tackle the full spectrum of edge AI workloads. Ara-1, the first-generation chip, delivers up to 6 eTOPS² and is already shipping in volume across vision-centric edge use cases. Ara-2, the second generation, is a powerhouse that delivers up to 40 eTOPS² and is optimized specifically for generative AI, large language models (LLMs), vision language models (VLMs), vision language action models (VLAs), agentic AI and system-level acceleration.

With support for both convolutional neural networks (CNNs) and transformer-based architectures, Ara-2 is built for the compute and memory bandwidth demands of modern AI. It excels in processing large language models and multimodal applications, such as combining visual inputs with speech or text for context-aware inferencing.

The Ara-2 enables real-time generative AI and LLM execution on AI-enabled compute and embedded systems, delivering low latency, lower operational costs and enhanced data privacy.

The architecture underpinning Ara-1 and Ara-2 features a programmable, RISC-V-based dataflow design that allows inference engines to be parsed and executed efficiently across proprietary multiply-accumulate (MAC) compute units. This flexibility means that solutions can adapt as AI algorithms evolve, so engineers can handle today’s workloads and adjust to whatever the future has in store.
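For readers unfamiliar with the term, a multiply-accumulate (MAC) operation is the basic arithmetic step behind most neural network layers. The sketch below is a plain Python illustration of the concept only; it does not describe how the Ara devices implement or schedule their MAC units, and the function names are hypothetical.

```python
from typing import Sequence


def mac(accumulator: float, a: float, b: float) -> float:
    """One multiply-accumulate step: accumulator + a * b."""
    return accumulator + a * b


def dot_product(weights: Sequence[float], activations: Sequence[float]) -> float:
    """Compute a dot product as a chain of MAC steps, one per element pair."""
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    acc = 0.0
    for w, x in zip(weights, activations):
        acc = mac(acc, w, x)
    return acc


def dense_layer_macs(num_inputs: int, num_outputs: int) -> int:
    """MAC count for one fully connected layer (bias terms ignored)."""
    return num_inputs * num_outputs


print(dot_product([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))  # 32.0
print(dense_layer_macs(1024, 1024))  # 1048576
```

A single 1024-by-1024 dense layer already needs over a million MAC steps per inference, which is why accelerators dedicate hardware to running many of them in parallel.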

Why Kinara Complements NXP

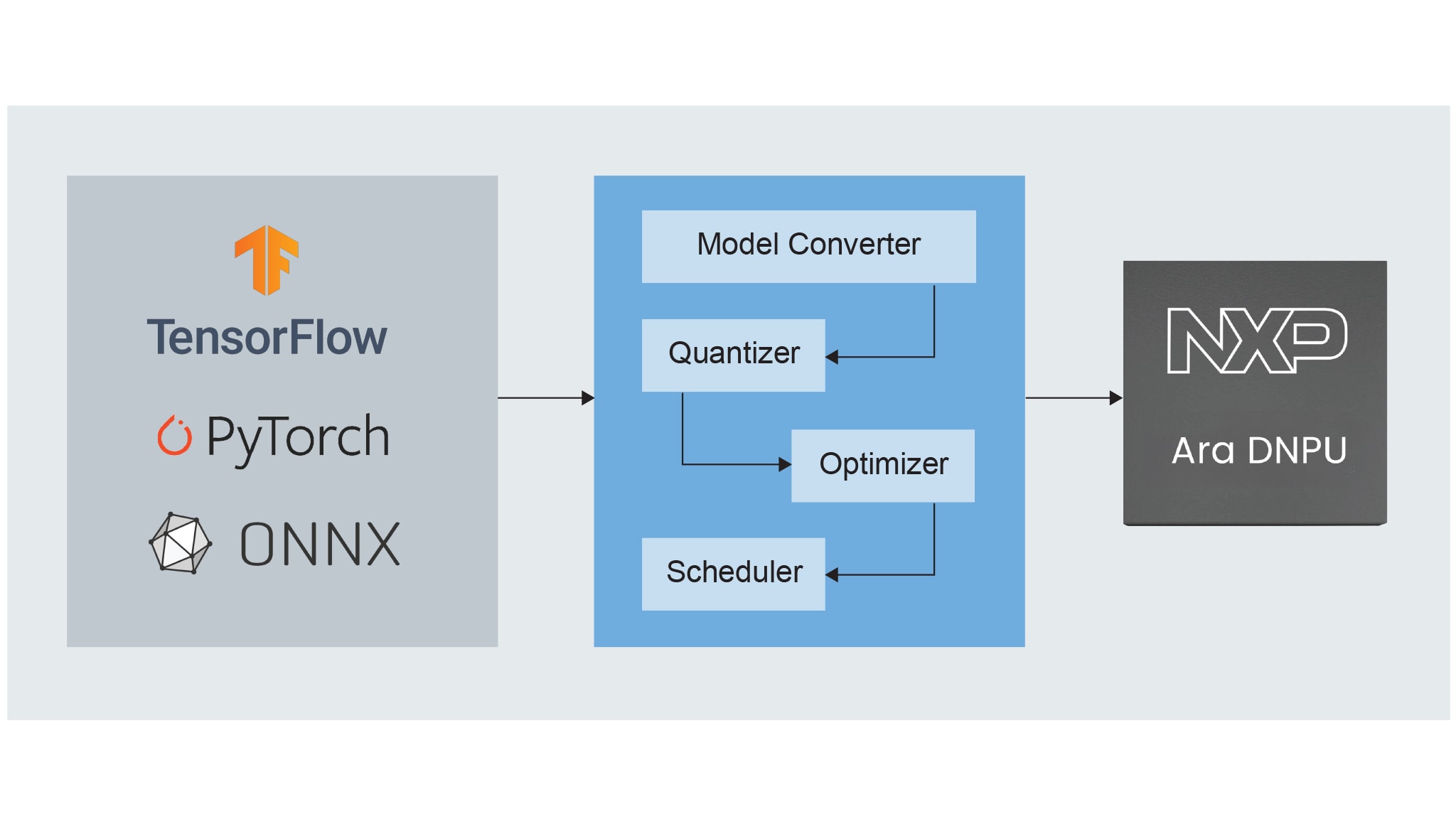

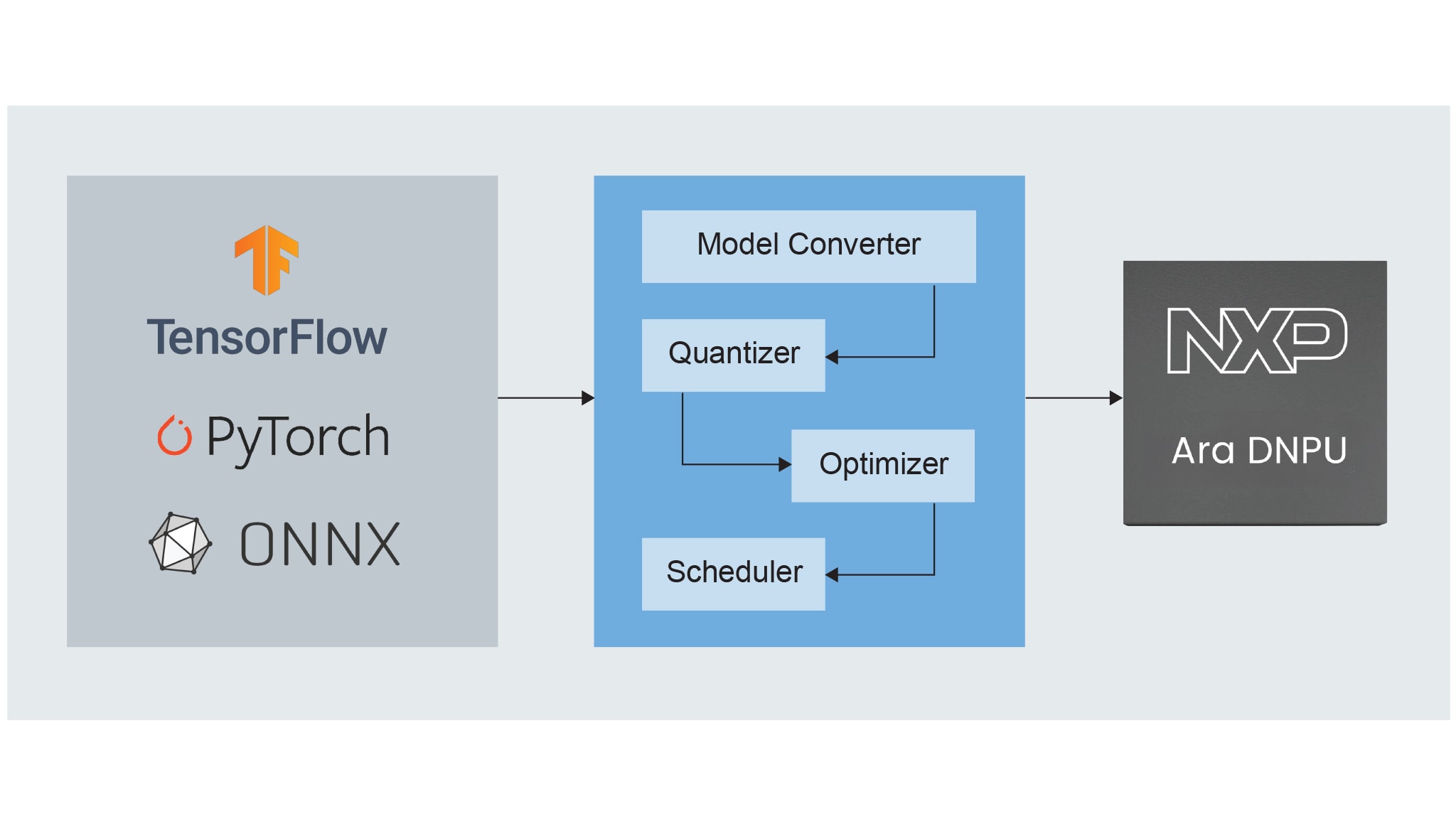

Kinara’s value goes beyond its silicon. Its comprehensive AI software stack includes a software development kit (SDK), model optimization tools and an extensive library of preoptimized AI models. The Kinara SDK will be integrated into NXP’s eIQ® software development environment so that developers can build, optimize and deploy AI applications across the combined portfolio with ease.

The comprehensive AI software stack includes a software development kit (SDK), model optimization tools and an extensive library of preoptimized AI models.

This unified tooling delivers a seamless and simplified user experience for developers. Whether building a smart camera, voice assistant, predictive maintenance engine or a multimodal human-machine interface, developers can now tap into a one-stop-shop experience. Our lineup of application processors, discrete NPUs, power management, security and connectivity is all backed by differentiated software support.

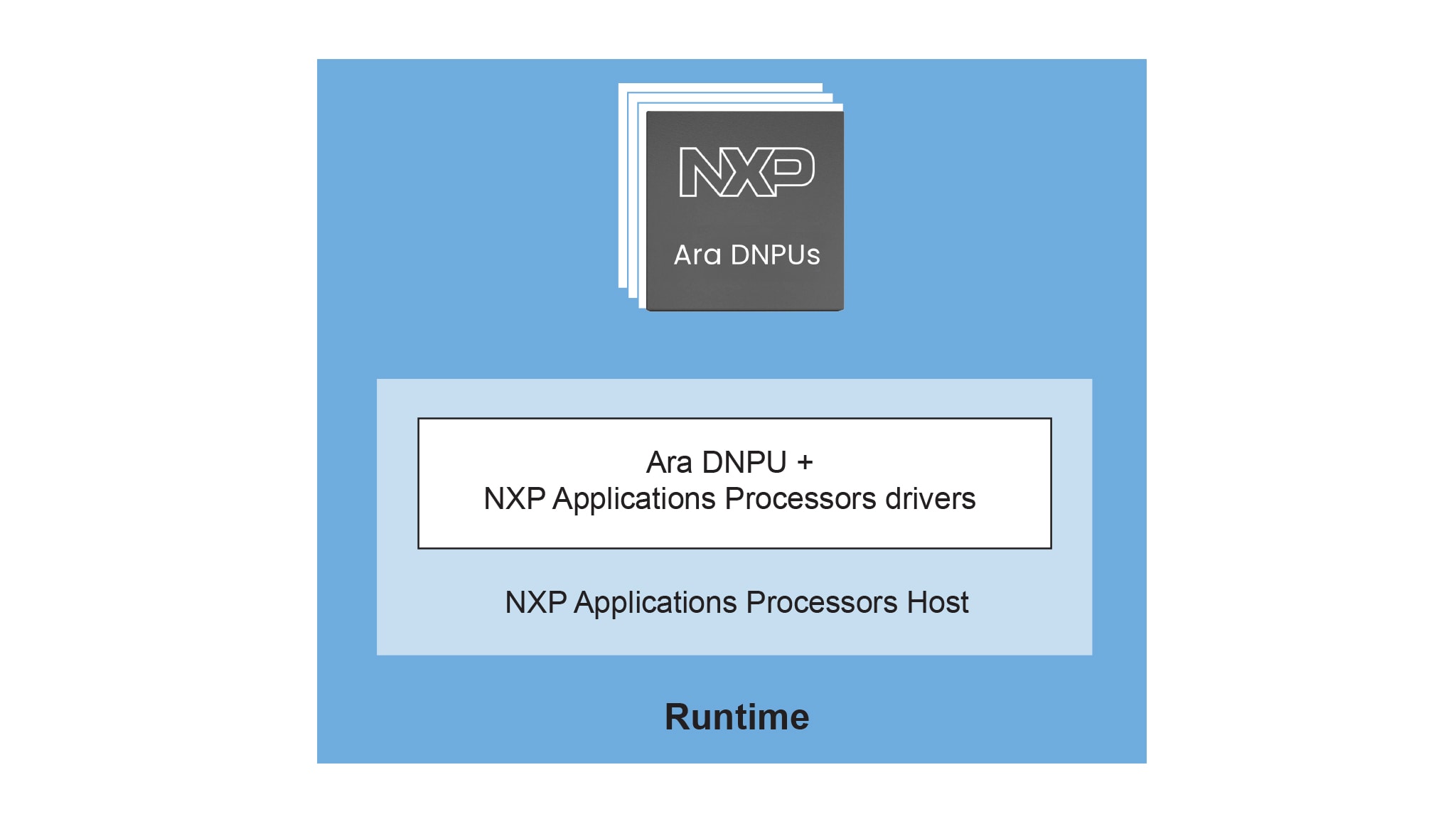

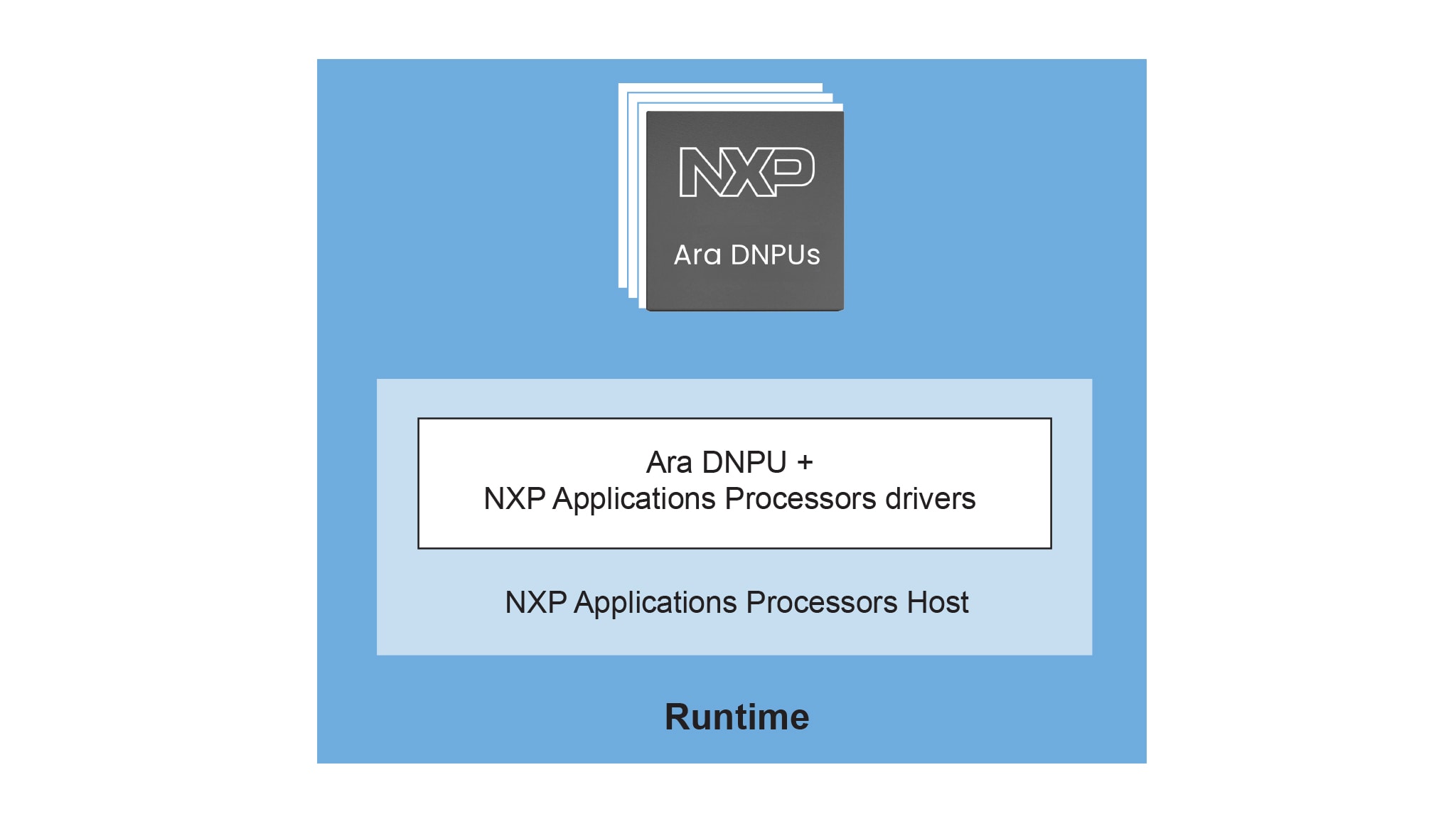

Kinara’s discrete NPUs also pair naturally with NXP’s i.MX applications processors (MPUs), such as the i.MX 8M Plus and i.MX 95. In edge AI use cases, the DNPU accelerator offloads heavy inferencing tasks while the MPU manages preprocessing, postprocessing and general-purpose compute. This division of labor allows customers to scale AI performance independently from the applications processor and achieve system-level flexibility across a wide range of cost and power budgets.
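The division of labor described above can be sketched as a three-stage pipeline. This is a simplified illustration, not NXP or Kinara code: `StubAccelerator`, `run_pipeline` and the toy weights are hypothetical stand-ins, and the stub computes a single dense layer on the host where a real system would hand the tensor to the DNPU through its driver and SDK.

```python
from dataclasses import dataclass
from typing import List, Sequence

Tensor = List[float]


def preprocess(raw_pixels: Sequence[int]) -> Tensor:
    """Host-side (MPU) step: scale 8-bit pixel values to the range [0, 1]."""
    return [p / 255.0 for p in raw_pixels]


def postprocess(scores: Tensor, labels: Sequence[str]) -> str:
    """Host-side (MPU) step: return the label with the highest score."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best]


@dataclass
class StubAccelerator:
    """Hypothetical stand-in for a discrete NPU; not a real driver or SDK class."""

    weights: List[List[float]]  # one row of weights per output class

    def infer(self, inputs: Tensor) -> Tensor:
        # A real accelerator would run the whole network on-device;
        # this stub evaluates one dense layer so the example is runnable.
        return [sum(w * x for w, x in zip(row, inputs)) for row in self.weights]


def run_pipeline(raw_pixels: Sequence[int],
                 accelerator: StubAccelerator,
                 labels: Sequence[str]) -> str:
    tensor = preprocess(raw_pixels)      # runs on the host processor
    scores = accelerator.infer(tensor)   # offloaded inference step
    return postprocess(scores, labels)   # runs on the host processor


npu = StubAccelerator(weights=[[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
result = run_pipeline([10, 20, 250], npu, ["dark", "bright"])
print(result)
```

Because the host code only depends on the `infer` call, the accelerator behind it can be swapped or scaled without touching the preprocessing and postprocessing stages, which is the flexibility the paragraph above refers to.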

The acquisition enhances NXP’s ability to provide complete and scalable AI platforms, from TinyML to generative AI, by bringing discrete NPUs and robust AI software to NXP’s portfolio of processors, connectivity, security and advanced analog solutions.

Finally, customers can have confidence knowing that Kinara has long been a member of the NXP Partner program and has already demonstrated successful deployments with NXP applications processors.

Unleashing the Power of Gen-AI Together

By integrating discrete NPUs into its portfolio, NXP can enable complex generative AI and large language model tasks to run at the edge. Generative AI excels in real-time interpretation and reasoning on image and video, improving clarity and decision-making based on high-quality visuals. Ara DNPU devices can run multimodal LLMs that extract features and contextual information from images and video and use text and voice inputs to perform tasks based on that visual input. This provides live analysis for applications such as monitoring the elderly, industrial safety analysis, factory hazard detection and building or home security.

In retail settings, generative AI creates virtual showrooms or product designs tailored to individual customer preferences. In manufacturing, DNPU solutions combined with generative AI and agentic AI drive efficiency, precision and adaptability, paving the way for innovative, targeted and autonomous applications across diverse industries.

Looking Ahead

The edge is evolving fast, with new AI use cases emerging every day. With the acquisition of Kinara, NXP now offers one of the industry’s broadest portfolios of edge AI compute options, covering microcontrollers, applications processors and discrete NPUs, so that customers can access full-stack solutions for any edge application. Through our holistic offering, customers can reduce development time, minimize system complexity and focus on differentiating their AI experiences from the competition.

¹Omdia Market Radar: AI Processors for the Edge 2024, Alexander Harrowell, 23 April 2025.

²eTOPS = equivalent TOPS.