Safe autonomous systems (SAS) are the “brains” of the autonomous car. Capturing ADAS (advanced driver assistance systems) information from multiple sensors, the SAS unit can build an environmental model and safely determine vehicle motion.

One headache car makers face when designing their next safe autonomous systems is the question of distributed versus centralized processing. Why is this a concern? Cars are becoming smarter, more connected and ever more automated. Front and rear camera systems detect pedestrians and trigger braking functions, surround-view camera systems help automate parking functions, surround radar sensing assists with highway distance control, and the list goes on.

New cars feature a growing number of sensors, ranging from ultrasonic, radar and camera to emerging LIDAR technologies that build 3D maps of the car's surroundings. In addition to sensor processing (fusion), safe autonomous systems must build an environmental model upon which a motion planning strategy can be deployed, and then add an interface to the cloud for over-the-air updates and map data. It’s easy to see how the compute load inside the car is increasing at an impressive rate.

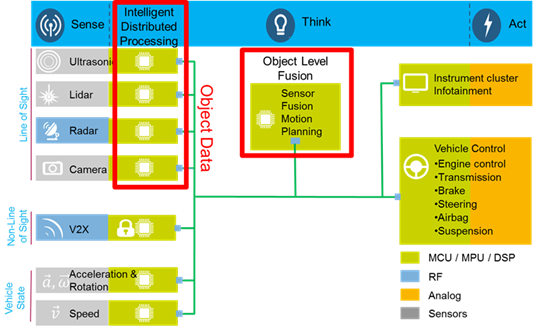

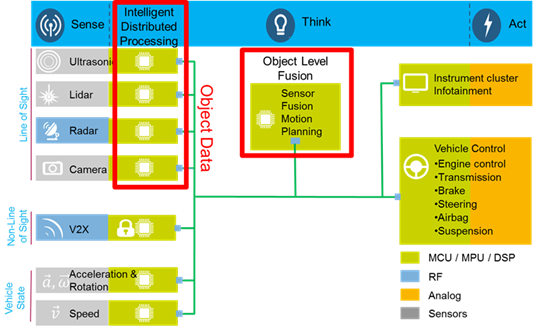

What does this mean for processor topology with respect to the vehicle network? The conventional approach is to distribute the processing of sensed data around the car. Imagine a front camera system that captures pixel data and turns it into classified object data, such as a detected pedestrian or cyclist. Or take another example: an ultra-wideband radar sensor that turns radar reflections into distance and angle data about the car in front on the highway, helping you adjust your speed or steering angle. In examples like these, high-level “object” information is passed to the vehicle's safe autonomous system ECU, which makes decisions about speed regulation, braking and steering. See Figure 1.

Figure 1: Distributed Processing with Object Level Fusion

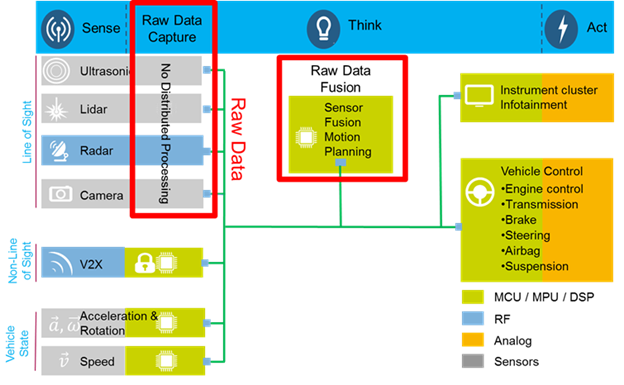

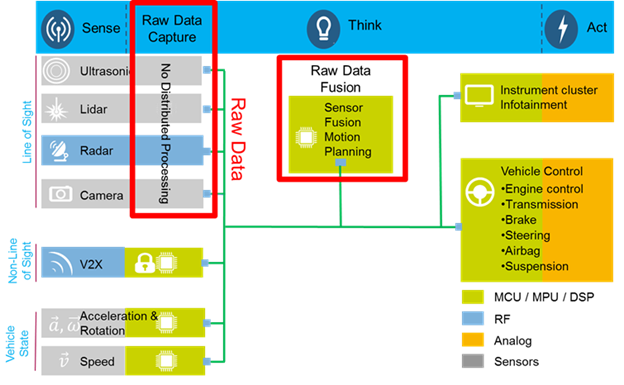
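To make the distributed model concrete, here is a minimal Python sketch of object-level fusion. Everything in it is an assumption for illustration: the `DetectedObject` fields, the nearest-neighbour pairing in `fuse_objects`, the 2 m gate and the toy `should_brake` rule are hypothetical and do not describe any particular ECU interface or production algorithm. The point is only that the central ECU works on short object lists rather than on pixels or radar reflections.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class DetectedObject:
    """High-level object reported by a sensor ECU (hypothetical format)."""
    sensor: str        # e.g. "front_camera" or "front_radar"
    label: str         # e.g. "pedestrian", "vehicle", "unknown"
    x_m: float         # longitudinal distance ahead of the car, metres
    y_m: float         # lateral offset from the car's centreline, metres
    confidence: float  # 0.0 .. 1.0


def fuse_objects(camera, radar, gate_m=2.0):
    """Pair each camera object with the nearest unused radar object within gate_m.

    A matched pair takes its label from the camera and its position from
    the radar. Unmatched objects from either sensor pass through unchanged.
    """
    fused = []
    unused_radar = list(radar)
    for cam in camera:
        best = min(
            unused_radar,
            key=lambda r: hypot(r.x_m - cam.x_m, r.y_m - cam.y_m),
            default=None,
        )
        if best is not None and hypot(best.x_m - cam.x_m, best.y_m - cam.y_m) <= gate_m:
            unused_radar.remove(best)
            fused.append(DetectedObject(
                sensor="fused",
                label=cam.label,
                x_m=best.x_m,
                y_m=best.y_m,
                confidence=max(cam.confidence, best.confidence),
            ))
        else:
            fused.append(cam)
    fused.extend(unused_radar)
    return fused


def should_brake(objects, stop_distance_m=15.0, lane_half_width_m=1.5):
    """Toy decision rule: brake if any object is in our lane and close."""
    return any(
        0.0 <= o.x_m <= stop_distance_m and abs(o.y_m) <= lane_half_width_m
        for o in objects
    )
```

For example, a camera pedestrian at (12.4 m, 0.3 m) and a radar return at (12.0 m, 0.5 m) fall inside the gate and merge into one fused pedestrian at the radar position, which `should_brake` then flags; a second radar return 60 m ahead passes through unmatched and does not trigger braking on its own.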

Another topology under consideration by car makers is the centralized processing model. In this case, the camera, LIDAR or radar sensors capture raw data and pass it to a centrally located high-performance machine that conducts the object-level processing. This means the raw data analytics (am I seeing a pedestrian or a motorcycle?) live in the same compute domain as the motion planning analytics (should I accelerate or brake/steer?). See Figure 2.

Figure 2: Centralized Processing with Raw Data Fusion
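As a rough counterpart for the centralized model, the sketch below pools raw points from several sensors into one shared grid and runs the planning step in the same program. It is a deliberately simplified illustration: the 0.5 m cell size, the rule that a cell counts as an obstacle once two different sensors hit it, and the function names are all assumptions, and the sketch does not attempt object classification at all. Real raw data fusion involves far heavier perception algorithms.

```python
from collections import defaultdict

CELL_M = 0.5  # grid resolution in metres (assumed value)


def to_cell(x_m, y_m):
    """Map a point in the vehicle frame to an integer grid cell."""
    return (int(x_m // CELL_M), int(y_m // CELL_M))


def fuse_raw(points_by_sensor):
    """Accumulate raw points from every sensor into one shared grid.

    points_by_sensor maps a sensor name to a list of (x_m, y_m) points.
    Returns a dict mapping each hit cell to the set of sensors that hit it.
    """
    grid = defaultdict(set)
    for sensor, points in points_by_sensor.items():
        for x_m, y_m in points:
            grid[to_cell(x_m, y_m)].add(sensor)
    return grid


def obstacle_cells(grid, min_sensors=2):
    """Keep only the cells confirmed by at least min_sensors different sensors."""
    return {cell for cell, sensors in grid.items() if len(sensors) >= min_sensors}


def nearest_obstacle_ahead(cells, lane_half_width_m=1.5):
    """Distance in metres to the closest confirmed cell in the driving corridor."""
    ahead = [
        cx * CELL_M
        for cx, cy in cells
        if cx >= 0 and abs((cy + 0.5) * CELL_M) <= lane_half_width_m
    ]
    return min(ahead, default=None)


def plan(distance_m, stop_distance_m=15.0):
    """Toy motion planning step running in the same compute domain as the fusion."""
    if distance_m is None:
        return "cruise"
    return "brake" if distance_m <= stop_distance_m else "cruise"
```

Because the central machine sees every raw point, the confirmation rule and the planner can be tuned together, which is the flexibility the centralized model offers. The price is that all of those points must be transported to, and processed by, one unit.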

Some car makers prefer the centralized model. This way they have access to all the raw data coming from the sensors and can build algorithms that react to sensed data in a way that matches the overall dynamic model of the car. On the other hand, the algorithm integration challenge, together with the power and cost required to process such information centrally, is a real headache, especially for mid-range and budget car models where cost and power consumption are key design considerations.
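A back-of-the-envelope calculation gives a feel for why central processing of raw data is demanding. The figures below are purely illustrative assumptions, not measurements from any real sensor or network: a single 1280 x 800 camera at 12 bits per pixel and 30 frames per second, compared with an object list of at most 32 objects of 32 bytes each, also updated 30 times per second.

```python
def raw_camera_bits_per_s(width_px, height_px, bits_per_px, fps):
    """Uncompressed video bit rate for one camera."""
    return width_px * height_px * bits_per_px * fps


def object_list_bits_per_s(max_objects, bytes_per_object, updates_per_s):
    """Bit rate of a fixed-size object list sent at a fixed update rate."""
    return max_objects * bytes_per_object * 8 * updates_per_s


# All numbers below are assumed values for illustration only.
raw = raw_camera_bits_per_s(width_px=1280, height_px=800, bits_per_px=12, fps=30)
objs = object_list_bits_per_s(max_objects=32, bytes_per_object=32, updates_per_s=30)

print(f"raw camera stream: {raw / 1e6:.1f} Mbit/s")
print(f"object list:       {objs / 1e6:.3f} Mbit/s")
print(f"ratio:             {raw / objs:.0f}x")
```

Under these assumptions one raw camera stream is roughly 369 Mbit/s against about 0.25 Mbit/s for the object list, a factor of 1500, and that is before multiplying by the number of cameras, radars and LIDARs on the car.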

By contrast, the distributed processing model allows car makers to offload raw data analytics to tier 1 suppliers for processing inside their camera or radar ECUs. The sensor fusion job for the car maker therefore becomes easier to a certain extent, because it only needs to deal with high-level “object” data. This means greater cost optimization and scalability for safe autonomous systems that address the needs of mid- and low-end car models.

In summary, there are many different architectural solutions to the autonomous driving problem. Thanks to a broad portfolio of safe ADAS sensing and processing solutions, NXP is well positioned to address those architectural challenges.

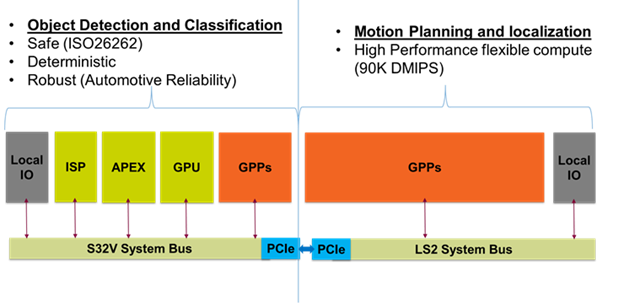

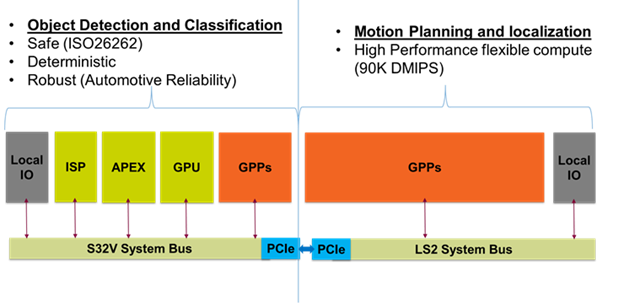

NXP recently announced the “BlueBox” solution for autonomous driving sensor fusion. The award-winning BlueBox platform includes a highly flexible general-purpose architecture based on ARMv8-A, with 12 ARM® Cortex® cores (up to 90,000 DMIPS) and a selection of accelerator IP for ADAS processing (APEX image processor, GC3000 graphics processor and image signal processor (ISP)). The general-purpose processing is provided by two NXP SoCs: the LS208x and the S32V. See Figure 3 for a description of the BlueBox processing elements.

Figure 3: BlueBox Processing Elements

The BlueBox solution has the general-purpose processing power needed to address the application processing needs of distributed and centralized data fusion and subsequent motion planning. Dedicated accelerators for vision processing, together with up to 90,000 DMIPS of general-purpose compute capability, provide a flexible, scalable processing solution for autonomous driving.

The LS208x and S32V234 processors included in the BlueBox are sampling today and are scheduled to be available for mass production as early as 2017. The out-of-the-box experience is supported by Linux and a wide array of software development kits, so designers can start developing their next-generation autonomous driving applications today on real target silicon.

Related links

BlueBox: Autonomous Driving Platform (S32VLS2-RDB)